Various types of data are continuously generated and require a swift response.

When your data has these characteristics, data streaming may be the right fit: it lets you continuously route the data to the relevant downstream systems in real time or near real time.

We’ll help you get started by covering what data streaming is, the different forms it can take, and the situations that call for its use.

What is data streaming?

Data streaming is a method of collecting information from source systems and routing it to the appropriate downstream systems in real time or near real time. The process typically involves a data streaming service, which collects and routes the “streamed” data continuously.

Types of data streaming

Data streaming typically comes in one of two forms:

Event streaming

When an event occurs in a source system, a message can be produced. The event streaming platform can then take this message and route it to the appropriate downstream systems. Moreover, since these events occur regularly, the event streaming platform continuously feeds the downstream systems new messages.

For example, let’s say that a store uses a point-of-sale (PoS) system for managing transactions. Once a transaction occurs (and it’s converted into an event), details about that transaction are collected, such as the purchase date, the transaction amount, and the store’s location. This information is turned into a message that a streaming provider can collect; in turn, the streaming provider can pass the information along to relevant downstream systems, like an inventory system and an accounting system.

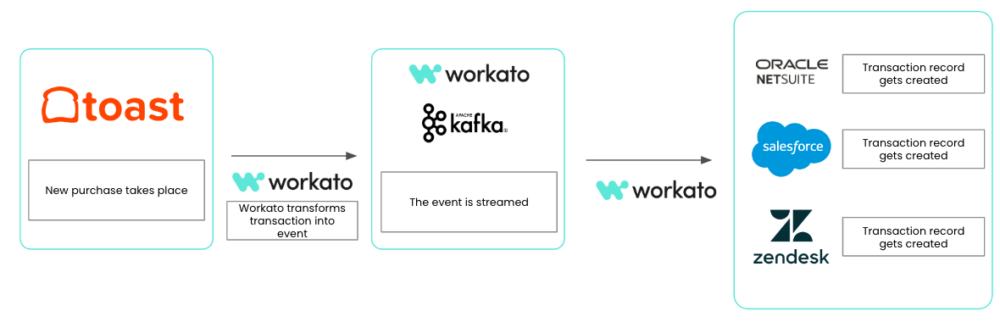
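
To make the flow above concrete, here’s a minimal Python sketch. It assumes a toy in-memory `EventStream` class in place of a real streaming platform, and the `TransactionEvent` fields, the `"transactions"` topic name, and the two downstream logs are all hypothetical names chosen for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TransactionEvent:
    """A message produced when a PoS transaction occurs (illustrative fields)."""
    purchase_date: str
    amount: float
    store_location: str


class EventStream:
    """Toy in-memory stand-in for an event streaming platform (an assumption)."""

    def __init__(self):
        # Maps a topic name to the handlers of its downstream systems.
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, event):
        # Route the message to every downstream system subscribed to the topic.
        for handler in self._subscribers[topic]:
            handler(event)


# Plain lists stand in for the inventory and accounting systems.
inventory_log = []
accounting_log = []

stream = EventStream()
stream.subscribe("transactions", inventory_log.append)
stream.subscribe("transactions", accounting_log.append)

# The PoS publishes one message; both downstream systems receive it.
stream.publish("transactions", TransactionEvent("2024-01-15", 42.50, "Boston"))
```

The PoS only needs to know about the stream, not about the systems that consume its messages, which is what makes it easy to add another subscriber later.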

An enterprise automation platform can play an important role in event streaming use cases. In our example, the platform can transform transactions into events and then feed these events to the streaming platform. The enterprise automation platform can also perform actions in downstream systems. For instance, you can use its low-code/no-code UX and pre-built connectors to connect with an ERP system like NetSuite, a CRM like Salesforce, and an ITSM tool like Zendesk. From there, you can build workflows in which, whenever an event in the streaming platform is a transaction, the enterprise automation platform creates a new transaction record in each of those applications.
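
The “if the event is a transaction, create a record in each application” workflow can be sketched in a few lines of Python. This is only an illustration of the logic, not how any particular automation platform implements it: the `handle_event` function, the event field names, and the in-memory lists standing in for NetSuite, Salesforce, and Zendesk are all assumptions.

```python
def handle_event(event, apps):
    """Create a transaction record in every downstream app.

    Returns the number of apps that received a record.
    """
    if event.get("type") != "transaction":
        return 0  # Ignore events that are not transactions.

    record = {"date": event["purchase_date"], "amount": event["amount"]}
    for records in apps.values():
        records.append(dict(record))  # Each app gets its own copy.
    return len(apps)


# In-memory lists stand in for the real applications (hypothetical).
apps = {"netsuite": [], "salesforce": [], "zendesk": []}

written = handle_event(
    {"type": "transaction", "purchase_date": "2024-01-15", "amount": 42.5},
    apps,
)
skipped = handle_event({"type": "refund_requested"}, apps)
```

Here the first event produces one record per application, while the second is ignored because its type isn’t `"transaction"`.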

Here’s how it can all look, assuming you use Toast as your PoS, Workato as your enterprise automation platform, either Workato or Apache Kafka as your data streaming service, and NetSuite, Salesforce, and Zendesk as your downstream applications:

File streaming

In file streaming, a file streaming platform takes a set number of records from a file at a specific cadence and feeds those records to an enterprise automation platform. From there, the data is processed and the appropriate actions take place in downstream systems.

For example, let’s imagine you have an on-prem CSV file of customer records that you want to move to your data warehouse. You can use a file streaming platform to move records from that file to the enterprise automation platform at a steady cadence, and as each record gets added, the enterprise automation platform creates the corresponding record in the data warehouse (e.g. Snowflake).
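
As a rough sketch of the idea, the Python below reads a CSV in fixed-size batches and hands each batch to a stand-in warehouse. The `stream_batches` helper, the sample customer data, and the `warehouse` list are hypothetical; a real setup would read the on-prem file and write to the actual data warehouse, pausing between batches to enforce the cadence.

```python
import csv
import io
from itertools import islice


def stream_batches(lines, batch_size):
    """Yield lists of up to `batch_size` records from CSV lines."""
    reader = csv.DictReader(lines)
    while True:
        batch = list(islice(reader, batch_size))
        if not batch:
            return  # The file is exhausted.
        yield batch


# An in-memory file stands in for the on-prem CSV (illustrative data).
customers_csv = io.StringIO("id,name\n1,Ada\n2,Grace\n3,Linus\n")

warehouse = []     # Stand-in for the data warehouse table.
batch_sizes = []   # Tracks how many records arrived in each batch.

for batch in stream_batches(customers_csv, batch_size=2):
    warehouse.extend(batch)
    batch_sizes.append(len(batch))
    # A real pipeline would wait here (e.g. time.sleep) to keep a steady cadence.
```

Because the file is read lazily, only one batch is held in memory at a time, which matters when the source file is large.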

When to use data streaming

Aside from the scenarios introduced at the beginning (data that is time-sensitive and continuously generated), it also makes sense to adopt data streaming when you’re dealing with a relatively complex data architecture. In other words, as you use more source and destination systems, you’re more likely to need a data streaming approach.

For example, if you have a dozen downstream systems that need to collect the transactions from your PoS, you’ll need a data streaming platform to route the transaction events to these downstream systems. Otherwise, you’re tasked with building a separate sync between the PoS and each downstream system, which is overwhelming both to implement and to manage.
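
A back-of-the-envelope calculation shows how this grows once more source systems enter the picture. The sketch below assumes every source must reach every destination, and that a streaming platform needs just one connection per system; both are simplifications, and the function names are hypothetical.

```python
def point_to_point_syncs(sources, destinations):
    """One dedicated sync for every source/destination pair."""
    return sources * destinations


def streaming_connections(sources, destinations):
    """Each system connects once to the streaming platform."""
    return sources + destinations


# Three source systems feeding a dozen downstream systems.
direct = point_to_point_syncs(3, 12)      # 36 syncs to build and maintain
streamed = streaming_connections(3, 12)   # 15 connections to the platform
```

Under these assumptions, the number of direct syncs grows with the product of sources and destinations, while the number of streaming connections grows only with their sum.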

Use Workato to stream data—and much more

Workato, the leader in enterprise automation, offers a PubSub connector that allows you to generate messages and build automations that trigger as soon as a message gets published. Our low-code/no-code platform also offers:

- Pre-built connectors to hundreds of applications as well as hundreds of thousands of automation templates so that your teams can integrate and automate at scale

- A customizable platform bot, Workbot, that allows you to create custom applications in your business communications platform, whether that’s Slack or Microsoft Teams

- Enterprise-grade governance and security through features like role-based access control and activity audit logs