Introduction

Today, forward-thinking technology leaders are shifting priorities from pilot projects to production-grade, value-driven implementations. The discussion is no longer about whether to integrate AI but how to do it effectively, securely, and at scale. In a webinar hosted by Workato, “How Technology Leaders Scale AI Impact with RAG and LLMs,” attendees got an inside look at how organizations scale the impact of AI through Retrieval-Augmented Generation (RAG), large language models (LLMs), and the Workato orchestration platform. Read on for key insights and strategies that IT leaders can use to guide their AI roadmap.

The Evolution of Enterprise Platforms

The traditional IT challenge has been navigating the complex interplay between integration and automation. Workato was founded on the belief that these should not be siloed. Instead, modern enterprises require a unified orchestration layer—one that manages people, processes, and data seamlessly across the entire organization.

With the rise of GenAI, this orchestration layer becomes even more vital. AI increases the surface area for orchestration, allowing businesses to infuse intelligence into previously rigid workflows. Whether it’s internal operations or customer-facing systems, LLMs are now being treated like utilities—callable services integrated directly into enterprise processes via APIs.

AI in the Orchestration Stack

At this moment, AI is being embedded throughout the orchestration stack in three core areas:

- Foundational Integration, where AI powers the backend of integration workflows, including data movement, enrichment, and validation.

- Operational Recipes, where AI enhances pre-built “recipes” by helping generate, categorize, and summarize data in real-time.

- Agentic Applications, where enterprises are now layering LLMs and domain-specific agents on top of their orchestration frameworks, enabling intelligent decision-making and action automation.

It’s clear that AI is no longer a bolt-on feature; it’s grown into a foundational layer across the orchestration stack. Enterprises are embedding AI at every level to create dynamic, adaptive systems that can learn, reason, and act.

The Power of Agentic AI

Within Workato’s framework, there are three specific pillars that define agentic AI:

- Contextual Knowledge Base: It’s imperative that agents have access to relevant, structured knowledge tailored to the business domain. This requires ingesting data from multiple sources (documents, internal wikis, and system logs) and vectorizing it into searchable embeddings.

- Operational Guidance: Guardrails and finely-engineered prompts transform generic LLMs into business domain experts. These system instructions guide agents on how to behave, handle ambiguity, and make company-policy-aligned decisions.

- Executable Skills: Unlike chatbots, agentic systems must do things, not just answer questions. Workato enables agents to invoke pre-defined skills that connect to external systems and apps like Jira, Okta, and hundreds of others to take action.
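The three pillars above can be sketched as a single data structure. This is a minimal illustration, not Workato's implementation: the `Agent` class, the `create_ticket` skill, and the sample knowledge-base entries are all hypothetical, and a naive keyword overlap stands in for real vector search over embeddings.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Agent:
    knowledge_base: List[str]   # pillar 1: contextual knowledge
    instructions: str           # pillar 2: operational guidance (system prompt)
    skills: Dict[str, Callable[[str], str]] = field(default_factory=dict)  # pillar 3

    def retrieve(self, query: str) -> List[str]:
        # Assumption: keyword overlap replaces embedding similarity search.
        terms = set(query.lower().split())
        return [d for d in self.knowledge_base if terms & set(d.lower().split())]

    def build_prompt(self, query: str) -> str:
        # Combine guidance and retrieved context into the text sent to an LLM.
        context = "\n".join(self.retrieve(query))
        return f"{self.instructions}\n\nContext:\n{context}\n\nQuestion: {query}"

    def run_skill(self, name: str, argument: str) -> str:
        # Only pre-registered skills can be invoked.
        if name not in self.skills:
            raise KeyError(f"Unknown skill: {name}")
        return self.skills[name](argument)


def create_ticket(summary: str) -> str:
    # Hypothetical skill; a real one would call an external ticketing system.
    return f"ticket created: {summary}"


agent = Agent(
    knowledge_base=[
        "Password reset requires manager approval.",
        "Laptops are refreshed every three years.",
    ],
    instructions="Answer only from the provided context and follow company policy.",
    skills={"create_ticket": create_ticket},
)
```

Keeping skills in an explicit registry means the agent can only take actions that were defined ahead of time, which is one simple way to express the guardrail idea in code.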

Building a RAG-Powered AI Assistant

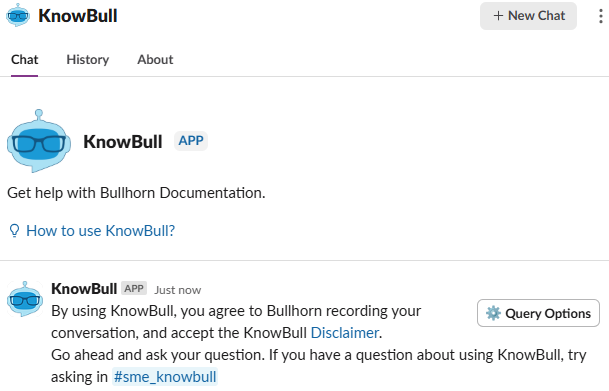

What does agentic AI look like in action? Amidst economic pressure and increasing customer demands, Bullhorn, a global leader in staffing and recruitment, undertook a massive transformation to support its customer success team with a GenAI-powered assistant. Their overarching goal was to deliver faster, more accurate answers across a complex product suite. Bullhorn built an AI knowledge assistant, KnowBull, with both Slack and web interfaces.

This assistant was built on the foundation of a RAG application that indexed internal knowledge and allowed new agents to retrieve instant answers across a wide range of topics. Initially developed with custom Python scripts and embedding pipelines, the assistant relied on vector databases and chunking strategies to break down documentation into machine-readable units. This produced a functional tool, but the approach proved difficult to scale and maintain.
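To make "chunking" concrete, here is a minimal sketch of one common strategy: fixed-size word windows with overlap. The source does not say which strategy Bullhorn used, so the function name `chunk_text` and its default sizes are assumptions chosen for illustration.

```python
from typing import List


def chunk_text(text: str, size: int = 200, overlap: int = 50) -> List[str]:
    """Split text into word windows of `size` words that share `overlap` words."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("require size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        # Stop once the current window already reaches the end of the text.
        if start + size >= len(words):
            break
    return chunks
```

The overlap keeps a sentence that straddles a boundary visible in both neighboring chunks, at the cost of storing some text twice.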

To streamline this process, Bullhorn’s team transitioned over to Workato, leveraging its orchestration capabilities to build a reusable ingestion pipeline. This significantly accelerated the time-to-deployment for new data sources while simultaneously adhering to strict data privacy policies by using on-prem agents and internal LLMs.

The final solution was a layered architecture featuring:

- Data Ingestion Module: Integrated with enterprise systems like Confluence, it supported metadata management, content deduplication, and format normalization.

- Embedding Layer: Open-source models like GritLM-7B were used for chunking and embedding, balancing performance with inference speed.

- Orchestration with Workato: Recipes were built for repeatable ingestion, enabling engineers to onboard new domains like HR or DevOps documentation with minimal friction.

- Private Vector Store & LLMs: To meet strict security standards, the entire inference pipeline—including embeddings and model calls—ran within the enterprise’s private cloud.
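The ingestion module's format normalization and content deduplication steps might look roughly like the sketch below. It is an assumption-laden illustration rather than Bullhorn's pipeline: the document shape (`source` and `body` keys) and the function names are hypothetical, and duplicates are detected by hashing normalized text, which only catches exact matches after whitespace and case are normalized.

```python
import hashlib
import re
from typing import Dict, Iterable, List


def normalize(text: str) -> str:
    # Collapse runs of whitespace so formatting differences do not matter.
    return re.sub(r"\s+", " ", text).strip()


def deduplicate(docs: Iterable[Dict[str, str]]) -> List[Dict[str, str]]:
    """Keep the first document for each distinct normalized body."""
    seen = set()
    unique: List[Dict[str, str]] = []
    for doc in docs:
        body = normalize(doc["body"])
        digest = hashlib.sha256(body.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        # Store the hash as metadata so later runs can skip unchanged content.
        unique.append({"source": doc["source"], "body": body, "content_hash": digest})
    return unique
```

Near-duplicates, such as two pages that differ by a single sentence, would pass through this filter; catching those requires a similarity-based approach.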

Comparative analysis showed that the Workato-based pipeline delivered cleaner, more consistent results than the earlier custom Python-based solution.

Broader Impact and Future Expansion

With Bullhorn’s proof of concept becoming a resounding success, the organization began retiring its legacy ingestion scripts and exploring new domain-specific applications, including:

- Incident Management, where Bullhorn is now using AI to analyze machine logs for root cause detection.

- HR Policy Bots, which offer contextual guidance on internal policies through self-service AI.

- Observability & Monitoring, which surfaces insights from telemetry data to IT admins through conversational interfaces.

This modular architecture allows each new domain to be spun up quickly, enabling scalable experimentation across the enterprise.

AgentX and Pre-Built AI Agents

In order to further support customers in accelerating their AI journey, Workato recently rolled out AgentX apps, which are domain-specific, pre-built agentic applications. They can be used in areas like:

- IT provisioning

- HR onboarding

- Finance approvals

- Sales enablement

For example, an IT agent might automatically check access policies, generate tickets in Jira, and provision apps in Okta—all through natural language conversations in Slack or Teams.

By combining LLMs with predefined orchestration logic, these agents can deflect support tickets, enhance user productivity, and reduce operational costs.
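The IT provisioning example can be sketched as a fixed sequence of policy check, ticket creation, and access grant. Everything here is hypothetical: `ACCESS_POLICY`, `FakeBackend`, and `handle_request` are in-memory stand-ins, and no real Jira or Okta API is called. In a real agent, an LLM would extract the user, department, and app from the chat message before this logic runs.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

# Hypothetical policy table: which apps each department may request.
ACCESS_POLICY: Dict[str, Set[str]] = {
    "engineering": {"github", "jira"},
    "sales": {"crm"},
}


@dataclass
class FakeBackend:
    """In-memory stand-in for the ticketing and identity systems."""
    tickets: List[str] = field(default_factory=list)
    grants: List[Tuple[str, str]] = field(default_factory=list)


def handle_request(user: str, department: str, app: str, backend: FakeBackend) -> str:
    # Step 1: check the access policy before taking any action.
    if app not in ACCESS_POLICY.get(department, set()):
        return f"Denied: {app} is not approved for {department}."
    # Step 2: record a ticket for auditability.
    ticket_id = f"IT-{len(backend.tickets) + 1}"
    backend.tickets.append(ticket_id)
    # Step 3: provision access.
    backend.grants.append((user, app))
    return f"Granted {app} to {user} (ticket {ticket_id})."
```

Keeping the policy check in deterministic code, rather than leaving it to the model, is one way to pair LLM-driven conversation with predictable orchestration logic.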

Key Takeaways for IT Leaders

- Start with High-Value Use Cases: Begin with domains that are knowledge-intensive but not mission-critical, like internal support, documentation, or onboarding.

- Design for Modularity: Build reusable ingestion and embedding pipelines that can scale across multiple departments.

- Don’t Skip Security: If your enterprise has strict policies, ensure your orchestration platform can work with on-prem models and vector stores.

- Embrace Multi-Agent Systems: Delegate tasks across specialized agents with their own knowledge bases and skills to create a distributed AI workforce.

- Focus on Orchestration, Not Just AI: True impact comes when AI is paired with execution—taking actions across connected systems, not just generating insights.
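As a rough illustration of the multi-agent takeaway, the sketch below routes each question to a specialized agent. The agents, keyword sets, and `route` function are hypothetical, and simple keyword matching stands in for the intent classification an LLM would normally perform.

```python
import re
from typing import Callable, List, Set, Tuple


def hr_agent(question: str) -> str:
    # Placeholder for an agent with its own HR knowledge base and skills.
    return f"[hr] {question}"


def it_agent(question: str) -> str:
    # Placeholder for an agent with its own IT knowledge base and skills.
    return f"[it] {question}"


# Each route pairs trigger keywords with the agent that owns that domain.
ROUTES: List[Tuple[Set[str], Callable[[str], str]]] = [
    ({"vacation", "benefits", "payroll"}, hr_agent),
    ({"laptop", "password", "vpn"}, it_agent),
]


def route(question: str) -> str:
    words = set(re.findall(r"[a-z]+", question.lower()))
    for keywords, agent in ROUTES:
        if words & keywords:
            return agent(question)
    return "[fallback] No specialized agent matched; escalate to a human."
```

Adding a new domain then means registering one more entry in `ROUTES` rather than changing the existing agents.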

The Road Ahead

The journey from experimentation to AI-first operations doesn’t happen overnight. It requires rethinking architecture, investing in orchestration, and embracing GenAI’s creative and operational potential.

Workato’s vision is to empower enterprises with the tools to build intelligent, agentic systems—where AI isn’t just an enhancement, but an embedded, productive member of the digital workforce.

See how Workato can help you orchestrate GenAI at scale — schedule a demo today.