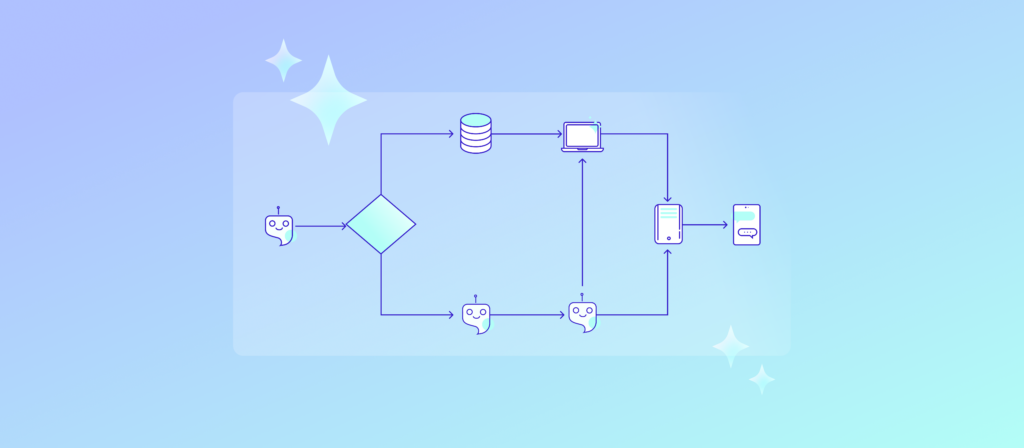

Unlike traditional software, AI agents are capable of acting independently, setting and pursuing their own goals, making decisions, and interacting with APIs, cloud services, and internal data. While this autonomy increases productivity and operational efficiency, it also introduces new security challenges organizations need to understand and manage.

Securing agentic AI is a business-critical concern, and as businesses investigate these technologies, understanding the risks, mitigation strategies, and governance frameworks is critical for safeguarding sensitive data, preventing operational errors, and maintaining trust in AI-driven processes.

What Is AI Agent Security?

AI agent security is the process of protecting autonomous agents from being misused, making mistakes, or being attacked. These agents are non-deterministic, which means that depending on the situation, past interactions, and their own reasoning processes, they may respond differently to the same input.

This flexibility makes them stronger and more adaptable than regular software, but it also makes them less predictable.

If you gave a new employee access to sensitive data, the ability to change enterprise records, and tools that could interact with the outside world, you would naturally be concerned about oversight, permissions, and potential errors.

Agents work in much the same way. They can read, write, and act across systems, making security and governance critical from the start.

The Agentic AI Threat Landscape

AI agent security focuses on preventing three major types of risk.

1. Data Security Concerns

One of the most immediate risks of agentic AI is that it increases the potential of data leakage. Agents with access to proprietary or confidential information can inadvertently disclose data — especially if users interact with agents without appropriate access controls.

Unlike humans, agents lack an inherent sense of confidentiality, which means that they will provide information based on the prompts they receive, regardless of sensitivity, unless appropriate systems are in place.

Businesses mitigate this risk by using strong permission checks, least privilege principles, and secure retrieval-augmented generation, among other tactics. These measures make sure that agents only see and share information that is relevant to the user or the situation.

For example, an agent whose job is to summarize internal financial data should never be able to send private reports externally without explicit approval.

2. Unintended Actions

Agents can act in unpredictable ways — even when given the same instructions. This nondeterministic behavior can result in unintended actions, which range from minor operational errors to critical mistakes.

For example:

- An agent with email-sending privileges may accidentally message the wrong recipient.

- An agent with the ability to delete or modify data may misinterpret instructions and remove more records than intended.

- If a financial reporting agent is not monitored, sensitive projections may be exposed.

These risks highlight the importance of safety precautions and oversight. Enterprises must monitor and control agent actions in the same way that a manager would supervise a new employee performing high-risk tasks, particularly when high-impact tools or sensitive data are involved.

3. Manipulation by Attackers

Adversarial interference is a growing concern for agentic AI systems. Prompt injection, data poisoning, and compromised external inputs can all be used to trick the system into acting in ways that were never really intended.

The consequences of this manipulation could potentially be severe, particularly when agents have access to sensitive systems or tools. Security teams must consider these risks when designing workflows so that agents are resilient against malicious prompts and tampered data.

It’s also worth considering how quickly the attack surface grows in agentic environments. Every integration point — APIs, cloud services, and third-party connectors — creates another opportunity for something to go wrong, especially as agents operate more independently across multiple systems.

Security strategies must evolve to accommodate the diverse contexts and unpredictable interactions that come with deploying agentic AI in the real world.

How AI Agent Security Works

Combining technical controls, process governance, and human oversight is necessary for effective AI agent security. Here are some tactics you can use to secure your agentic workflows.

1. Authorization and Permissions

Agents should only access data and tools that are appropriate for the user or context they serve. Access should be restricted according to the least privilege principle so that agents can’t carry out high-impact actions without receiving prior authorization.

2. Tool Access Management

Enterprises must assess the risks of each tool available to agents. To do this, ask these questions:

- Should the agent have delete privileges?

- Can the agent communicate externally?

- Does it have access to sensitive databases?

Limiting capabilities to what is required reduces the possibility of unintended or destructive actions.

3. Guardrails and Human-in-the-Loop

For very important or potentially dangerous operations, people need to be in charge and have the final say. Agents can suggest actions, but in the end, a human with human judgment must approve them before they are carried out. This approach lowers risk in theory while still letting agents get work done.

4. Monitoring, Explainability, and Incident Response

Logging in the traditional sense might not be enough for agentic systems. Security teams need tools that let them follow an agent’s actions to find out why they made certain choices. Explainability frameworks help security analysts and auditors look at how agents act, find unusual behavior, and deal with incidents in a timely manner.

Advanced monitoring should let you keep an eye on more than one agent at a time, keep tabs on new behaviors, and make sure that everyone is responsible for their own decisions.

Getting Started With Securing AI Agents

For enterprises just beginning to implement agentic AI, practical steps to getting started include:

Define the Scope of Agent Privileges

The security process for enterprises starting to implement agentic AI begins with the scope definition of each agent’s privileges. Broadly, this means to decide which tools, data sources, and systems agents should interact with and resist the temptation to grant broad, unnecessary access.

Implement Access Controls

From there, you need to implement accurate access controls. A great way to do that is by using an identity and access management tool (IAM) that will allow you to enforce authorization policies, manage credentials, and make sure that permissions adjust based on context.

Establish Guardrails for Critical Actions

It’s very important to set guardrails around high-impact actions. We mentioned earlier in the post that any operation that could potentially cause significant harm if executed incorrectly should route through a human for approval.

Plan for Monitoring and Auditing

Monitoring and auditing should be implemented as a foundational capability. Organizations need a way to log agent decisions, track outcomes, and detect unusual behavior early on so that investigations can begin before problems escalate.

Anticipate Dynamic Behavior

Since agents adapt over time, businesses should prepare for this dynamic behavior. Security and governance systems must be adaptable, able to evolve with agents as new behaviors and threats emerge.

Where MCP Fits In

Many companies are turning to the Model Context Protocol (MCP) to help agents interact with business systems in a structured and predictable way, especially as they start to take agent deployment seriously in production environments.

Agents rely on tools, APIs, and data sources; MCP introduces a standardized layer between them. Instead of letting the agent touch these systems directly, MCP forces every interaction to go through a defined contract that specifies what the agent can do, what inputs it must provide, and what outputs it should expect.

This turns agent actions into controlled, auditable steps rather than open-ended API calls.

By limiting access, enforcing permissions, and providing clear visibility into every operation, MCP reduces the likelihood of accidental overreach, protects against malicious prompts, and gives security teams the confidence that agents are operating within approved boundaries.

Read everything you need to know about MCP in this complete guide.

Secure Your Agentic AI Workflows Today

Agentic AI provides transformative benefits, but it also poses fundamentally new security challenges. Enterprises must reconsider traditional security frameworks, tailoring authorization, monitoring, and governance to the dynamic, autonomous, and distributed nature of these systems.

For enterprises investigating structured governance of agentic AI, Workato’s Model Context Protocol (MCP) and related resources provide frameworks for managing agent access, tool permissions, and security at scale.

With Workato, organizations can reap the productivity and efficiency benefits of agentic AI while minimizing risks to data, operations, and business integrity by carefully designing, monitoring, and governing AI agents. It’s an easy way to unlock the potential of the powerful new technology without putting your business at risk.

This post was written by Alex Doukas. Alex’s main area of expertise is web development and everything that comes along with it.