It’s never been more important to have connected, flexible system architecture.

AI is reshaping the role of APIs, molding them into enablers of contextual reasoning and intelligent automation. More than ever, developers and technology architects feel the need for composable systems that can adapt quickly, control the flow of data to AI agents, and orchestrate agent behavior.

The Model Context Protocol (MCP) is an emerging standard for how AI communicates with enterprise assets such as APIs, and it fits neatly into the orchestration layer of your composable infrastructure.

MCP and APIs are the new building blocks of enterprise intelligence. APIs power the flow of data across systems, and MCP gives that flow direction and meaning, helping AI make sense of it all. Together, they turn your orchestration layer into an intelligence engine you can scale across the enterprise.

Rigid vs. composable API architecture

Experience, process, systems.

This three-tiered API model was once the blueprint for scaling connectivity. However, many organizations that fanatically followed the model now find themselves trapped in over-engineered systems. Rigid adherence to it can lead to ballooning costs, sluggish delivery, and limited reuse.

A composable approach is completely different. It’s a shift from rigid, static integration models to dynamic, modular architectures that support rapid assembly, reassembly, and orchestration of business capabilities. This architecture is better prepared for AI, as AI doesn’t need new, complex sets of APIs to fit into a composable system. Instead, AI models can be added as just more building blocks in the system.

It’s simple. APIs should serve the architecture, not the other way around. The real goal is agility and how fast an organization can orchestrate and evolve its system capabilities to meet new demands.

When AI is powered by integration and automation in a composable framework, it unlocks new levels of speed, efficiency, and impact, turning disconnected tools into a unified engine for transformation.

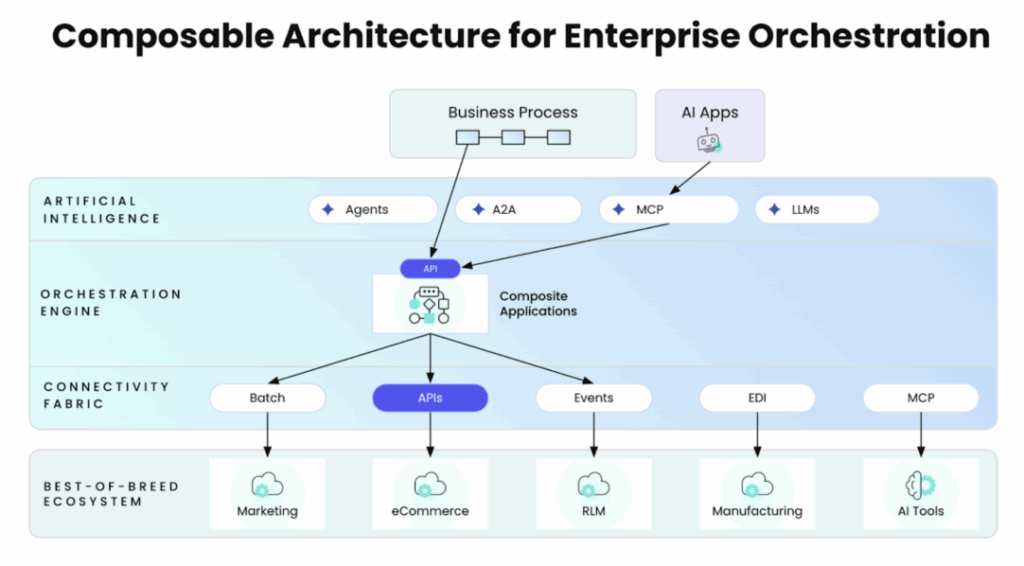

Composable architecture for enterprise orchestration

Enterprise orchestration connects and automates processes, data, and workflows across your systems, applications, and services. It’s the layer that sits above individual systems and manages the interactions between microservices, APIs, and data pipelines. It ensures workflows are executed in the correct sequence, dependencies are managed effectively, and data is synchronized across platforms.
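To make "correct sequence" and "dependencies" concrete, here is a minimal sketch of dependency-ordered execution using Python's standard `graphlib` module. The step names are hypothetical and are not taken from any Workato product; a real orchestration layer would also handle retries, parallelism, and data synchronization.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each step maps to the set of steps it depends on.
steps = {
    "fetch_order": set(),
    "check_inventory": {"fetch_order"},
    "charge_payment": {"fetch_order"},
    "ship_order": {"check_inventory", "charge_payment"},
}


def execution_order(dependencies):
    """Return one valid order in which every step runs after its dependencies."""
    return list(TopologicalSorter(dependencies).static_order())


order = execution_order(steps)
print(order)
```

Any order this returns starts with `fetch_order` and ends with `ship_order`; the two middle steps are independent of each other, so an orchestrator could run them in either order or in parallel.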

Workato’s enterprise orchestration platform enables AI composability through capabilities like:

- Model Context Protocol (MCP), the intelligence layer enabling agents and automations to understand, reason, and take action with enterprise data

- AI agents or “Genies,” intelligent digital workers that execute complex workflows autonomously

- AI workflows, orchestrations that leverage artificial intelligence to process data and augment your workflows

- AI and ML model integration, integrations with cloud AI/ML platforms that let developers embed model predictions and classifications directly into workflows

- API Management (APIM), to create, manage, and secure internal and external APIs with ease

Here’s how AI and APIs interact in the enterprise orchestration layer of a composable system:

With this approach, builders can package existing APIs as modular, reusable agent tools, what Workato calls “skills.” By using “recipes,” users can orchestrate APIs, events, and business logic into workflows that are inherently adaptable and context-aware—qualities essential for AI agents, workflows, and integration.

At the core of this approach is MCP, which standardizes connections between LLMs and APIs. MCP provides a unified environment where agent skills (built from APIs, recipes, or events) are registered, managed, and orchestrated. The framework lets organizations ingest existing APIs and package them as agent skills, eliminating the need to build agentic capabilities from scratch.
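As a rough illustration of what packaging an existing API as a skill might involve, the sketch below wraps a plain function (standing in for an existing endpoint) with a name, a description, and an input schema, then registers it. `Skill`, `SkillRegistry`, and `get_order_status` are hypothetical names invented for this example; they are not Workato's API.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass(frozen=True)
class Skill:
    """Hypothetical wrapper: an existing API call plus the metadata an agent needs."""
    name: str
    description: str
    input_schema: Dict[str, Any]
    handler: Callable[..., Any]


class SkillRegistry:
    """Hypothetical registry where skills are registered, described, and invoked."""

    def __init__(self) -> None:
        self._skills: Dict[str, Skill] = {}

    def register(self, skill: Skill) -> None:
        if skill.name in self._skills:
            raise ValueError(f"skill already registered: {skill.name}")
        self._skills[skill.name] = skill

    def describe(self) -> List[Dict[str, Any]]:
        # Metadata only: this is what an agent would read to choose a skill.
        return [
            {"name": s.name, "description": s.description, "inputSchema": s.input_schema}
            for s in self._skills.values()
        ]

    def invoke(self, name: str, **arguments: Any) -> Any:
        return self._skills[name].handler(**arguments)


def get_order_status(order_id: str) -> dict:
    # Stand-in for an existing API endpoint; a real handler would make an HTTP call.
    return {"order_id": order_id, "status": "shipped"}


registry = SkillRegistry()
registry.register(
    Skill(
        name="get_order_status",
        description="Look up the fulfillment status of an order by its ID.",
        input_schema={
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
        handler=get_order_status,
    )
)

result = registry.invoke("get_order_status", order_id="A-100")
```

The point of the design is that the underlying API is untouched: the skill only adds the description and schema that let an agent decide when and how to call it.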

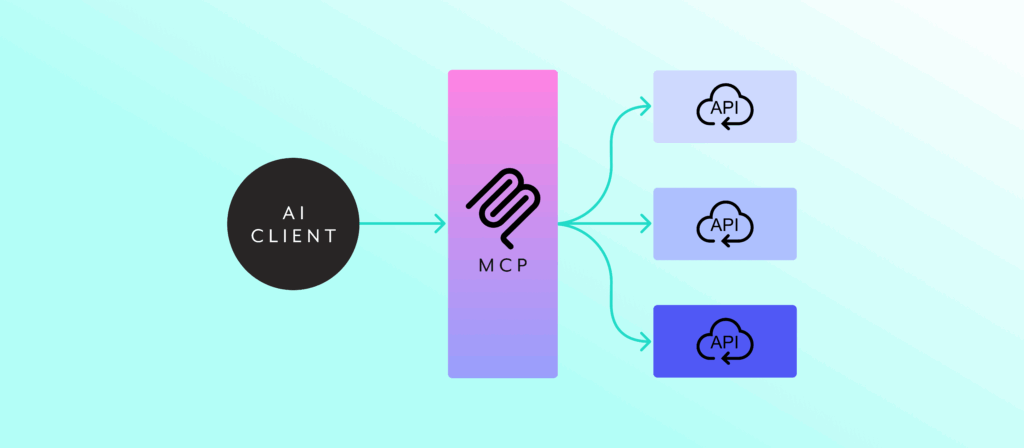

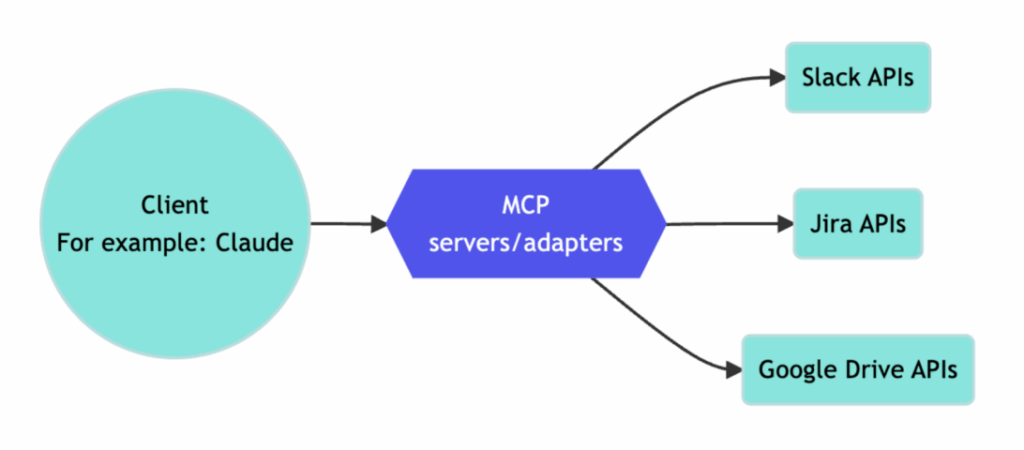

Here’s a visualization of how MCP bridges the gap between the AI model (the “client”) and your APIs:

As a bridge between AI and APIs, MCP provides:

- Semantic interoperability by giving structure and meaning to the APIs that models can interact with, making them accessible to agents without extensive retraining or manual configuration.

- Secure reasoning boundaries by defining the guardrails around how models access APIs, ensuring that every action complies with your enterprise governance and security policies.

- Dynamic orchestration by letting agents compose and chain different APIs together to complete tasks, turning APIs into reusable skills that can be called on at appropriate times.
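To ground these three points, recall that MCP is built on JSON-RPC 2.0: a client discovers tools with `tools/list` and invokes one with `tools/call`. The sketch below handles those two methods in-process, with no transport, session setup, or authentication, so treat it as an illustration of the message shapes and not as a working MCP server. The tools `lookup_customer` and `open_ticket` are hypothetical.

```python
import json


def lookup_customer(email: str) -> dict:
    # Stand-in for an existing CRM API.
    return {"customer_id": "C-42", "email": email}


def open_ticket(customer_id: str, subject: str) -> dict:
    # Stand-in for an existing ticketing API.
    return {"ticket_id": "T-1", "customer_id": customer_id, "subject": subject}


# Hypothetical tool catalog: description and schema give each API meaning for the model.
TOOLS = {
    "lookup_customer": {
        "description": "Find a customer record by email address.",
        "inputSchema": {"type": "object", "properties": {"email": {"type": "string"}},
                        "required": ["email"]},
        "handler": lookup_customer,
    },
    "open_ticket": {
        "description": "Open a support ticket for a customer.",
        "inputSchema": {"type": "object",
                        "properties": {"customer_id": {"type": "string"},
                                       "subject": {"type": "string"}},
                        "required": ["customer_id", "subject"]},
        "handler": open_ticket,
    },
}


def handle(request: dict) -> dict:
    """Answer a single JSON-RPC request for tools/list or tools/call."""
    req_id = request.get("id")
    method = request.get("method")
    if method == "tools/list":
        result = {"tools": [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]}
    elif method == "tools/call":
        params = request.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return {"jsonrpc": "2.0", "id": req_id,
                    "error": {"code": -32602, "message": "Unknown tool"}}
        output = tool["handler"](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": json.dumps(output)}], "isError": False}
    else:
        return {"jsonrpc": "2.0", "id": req_id,
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": req_id, "result": result}


# An agent chaining two tools: the output of the first feeds the second.
listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
found = handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                "params": {"name": "lookup_customer",
                           "arguments": {"email": "ana@example.com"}}})
customer = json.loads(found["result"]["content"][0]["text"])
ticket = handle({"jsonrpc": "2.0", "id": 3, "method": "tools/call",
                 "params": {"name": "open_ticket",
                            "arguments": {"customer_id": customer["customer_id"],
                                          "subject": "Login issue"}}})
```

The `tools/list` response is where semantic interoperability shows up, since the model reads descriptions and schemas instead of being retrained per API. The chained calls at the end show one way an agent might compose two APIs into a single task.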

APIs and MCP: the foundation of enterprise intelligence

Your ability to achieve enterprise intelligence depends on how effectively your systems understand context, share information, and act on it. APIs and MCP are the cornerstones that make this possible.

APIs are still the fundamental mechanism for system interoperability and composability. They expose business capabilities, data, and logic in a standardized, consumable way, so that humans and AI can access them in secure, predictable ways. Nothing’s changed here. APIs define what enterprise systems can do, providing the actions, data, and logic that underpin higher-level reasoning and automation.

If APIs define what’s possible, MCP defines how AI interacts with your system capabilities. It is the standardized interface between AI models, APIs, and enterprise data, and it determines which applications and data AI agents and LLMs can access, and under what context or permissions they can be used.
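One way to picture "which applications and data, under what permissions" is a policy check that runs before any tool is exposed or called. The sketch below assumes a simple role-based policy; `AgentContext`, `TOOL_POLICY`, and the role and tool names are all hypothetical, and a production deployment would rely on the platform's own governance controls.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class AgentContext:
    """Hypothetical identity and roles attached to an agent's session."""
    agent_id: str
    roles: FrozenSet[str]


# Hypothetical policy: tool name -> roles allowed to call it.
TOOL_POLICY = {
    "get_order_status": frozenset({"support", "sales"}),
    "issue_refund": frozenset({"finance"}),
}


def allowed_tools(ctx: AgentContext) -> List[str]:
    """Tools this agent may see; anything else is never offered to the model."""
    return sorted(name for name, roles in TOOL_POLICY.items() if roles & ctx.roles)


def authorize(ctx: AgentContext, tool_name: str) -> None:
    """Raise PermissionError unless the agent holds a role permitted for the tool."""
    permitted = TOOL_POLICY.get(tool_name)
    if permitted is None or not (permitted & ctx.roles):
        raise PermissionError(f"{ctx.agent_id} may not call {tool_name}")


support_agent = AgentContext(agent_id="helpdesk-bot", roles=frozenset({"support"}))
visible = allowed_tools(support_agent)
authorize(support_agent, "get_order_status")  # passes silently
```

Filtering the tool list and checking again at call time are deliberately separate steps: the first limits what the model can reason about, and the second enforces the boundary even if a request names a tool the agent was never shown.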

Through MCP, enterprises can evolve rigid API-driven connections into context-aware, adaptive orchestration, where AI agents understand intent, reason using relevant data, and act accordingly.

For more, read the whitepaper “The Role of APIs and MCP in Orchestration and Agentic AI.”