Java UDFs: Bringing Data Programmability to Your Snowflake Automations in Workato

As businesses invest more in accelerating their journey from data to decisions, there is an increase in usage of Workato for data pipelines to load data into Snowflake, and make insights in Snowflake accessible from business apps.

More and more customers are moving high volumes of data from SaaS, on-prem, and file apps into Snowflake. Additionally, customers are using Workato’s unique trigger capabilities to create automations for pushing actionable insights from Snowflake back into business apps – a trend popularly known as “Reverse ETL”.

As a leading Snowflake technology partner, we have built robust capabilities in the platform in addition to our Snowflake connector. Customers get the ability to combine the power of Workato and Snowflake to create the most optimal data pipelines.

The connector supports schemaless ELT, push-down SQL, and the execution of stored procedures and tasks to use the power of Snowflake. We focused on making the connector easy to use, secure, and deliver the performance customers expect for high throughput operations, and provide access to the latest features in Snowflake for data pipelines. The latest update to our Snowflake connector supports Snowflake’s Java user-defined functions (UDFs), currently in public preview.

As part of this initiative, we’re humbled to be part of Snowflake’s Snowpark Accelerated program featuring partners that support these powerful new features. We’ve seen the power of Snowflake through the usage of the platform in the automation workflows of customers (and our own usage) and are incredibly excited about the new use cases that Snowpark and Java Functions will open up especially when used as part of a Workato recipe.

Utilising Snowflake Java UDFs with Workato

Java UDFs allow developers to build custom and reusable functions that can be leveraged by Snowflake users across the organization. This greatly enhances productivity as it enables data workers to leverage the flexibility of Java and a rich community of libraries to transform and augment data in their pipelines.

To demonstrate how easy it is to do this, we’ve prepared a practical scenario that can be applied to data pipelines built by our clients.

The scenario

If your organization uses Salesforce to manage customer queries and feedback, your Customer Support team might be inundated with Salesforce cases to follow up with daily. It can become challenging to sift through the stream of messages from customers and prioritize efforts towards the most pressing cases.

We can achieve this with Java UDFs and an AI-powered sentiment analyzer to flag critical comments. Thankfully, we will not need to build and train an AI model on our own, as we can leverage excellent work from the Stanford CoreNLP Java Library.

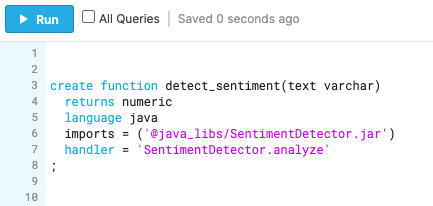

Creating the Java UDF on Snowflake

The above statement creates a Precompiled Java UDF that allows us to use an externally built and compiled Java executable (SentimentDetector.jar). This JAR executable contains an analyze method which takes in a single text argument. It returns an integer between 0 and 4, where 0 is a negative sentiment, and 4 is a positive sentiment.

Learn more about writing Java UDFs in the Snowflake documentation.

The data pipeline

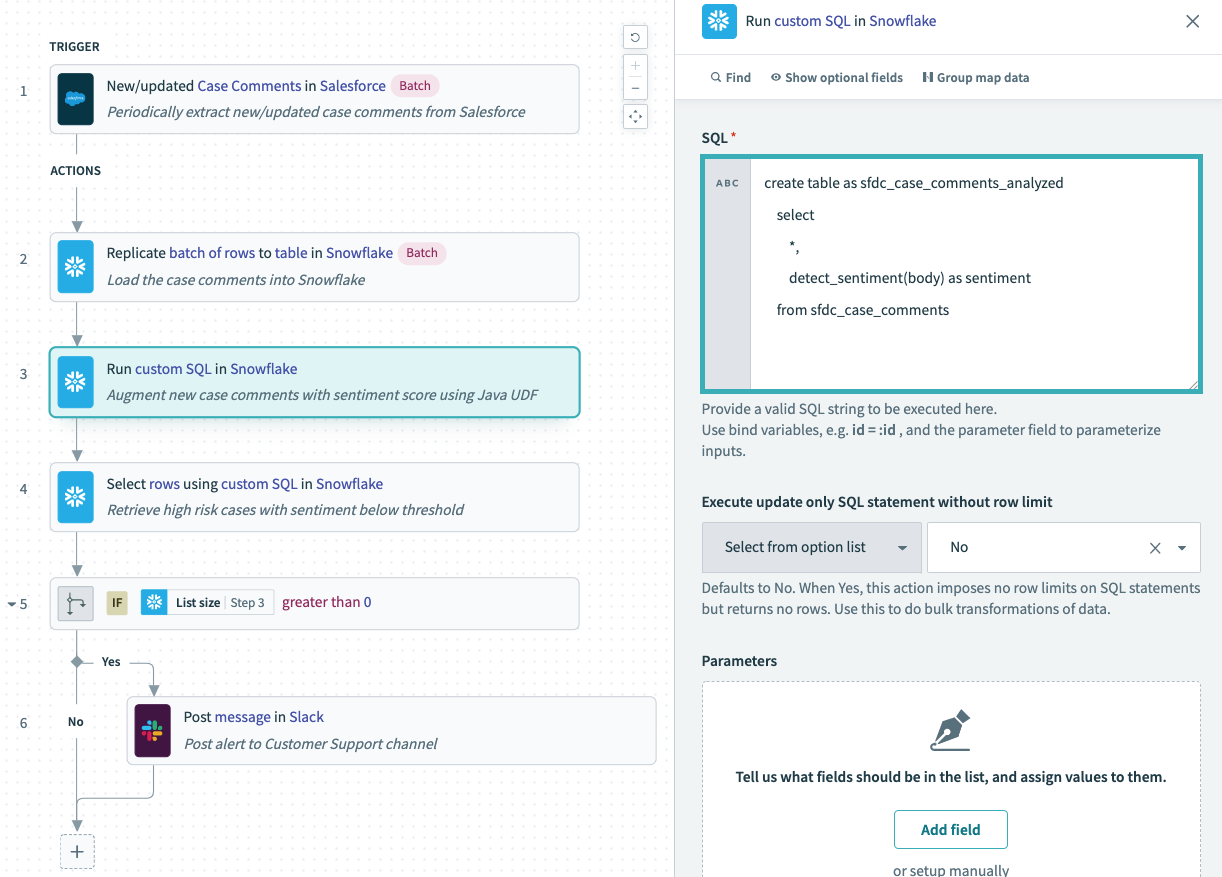

This recipe defines a data pipeline that loads Salesforce Case Comments data incrementally to Snowflake.

Augmenting the data using Java UDF

As with all things Snowflake, triggering our Java UDF involves simply running some SQL. In step 3 of the recipe, we use Workato’s Run custom SQL action work on the case comments data that has landed on Snowflake. A new table sfdc_case_comments_analyzed is created, containing a sentiment column. This new column is populated by executing our detect_sentiment UDF against each case comment’s text content.

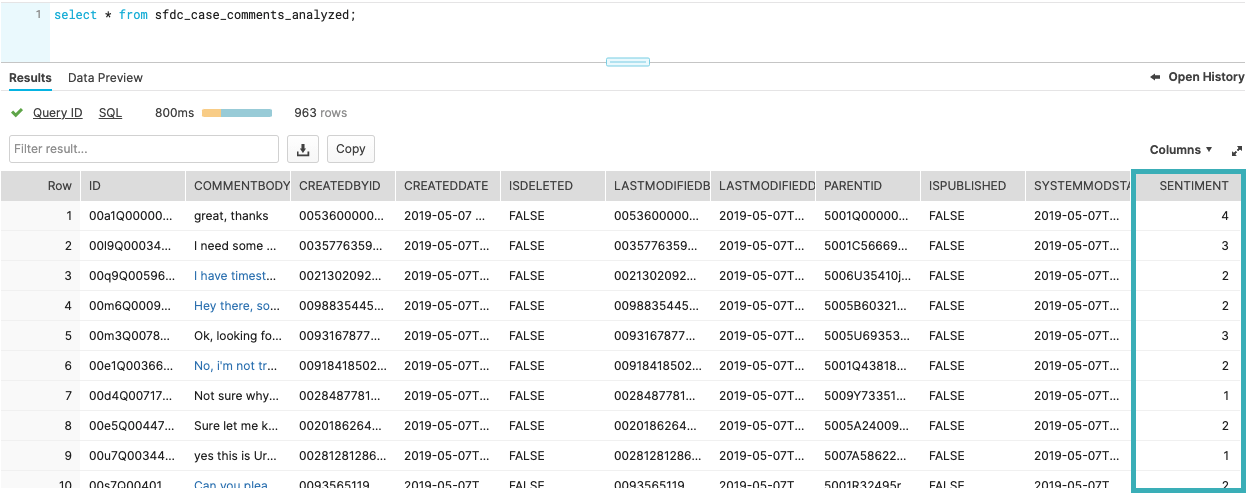

How the augmented data looks in Snowflake

Using Workato to automate the deployment of Java UDFs

As your Java UDFs become more complex, you’ll want to enforce development processes like versioning, code reviews and running automated test suites. However, after passing these quality gates, the process of taking the Java UDF code and deploying it on Snowflake would still be painfully manual.

To make matters worse, your organization might have a team maintaining Java UDFs, and another team owning Snowflake administration. The handoff between teams is friction in the process that costs both time and money.

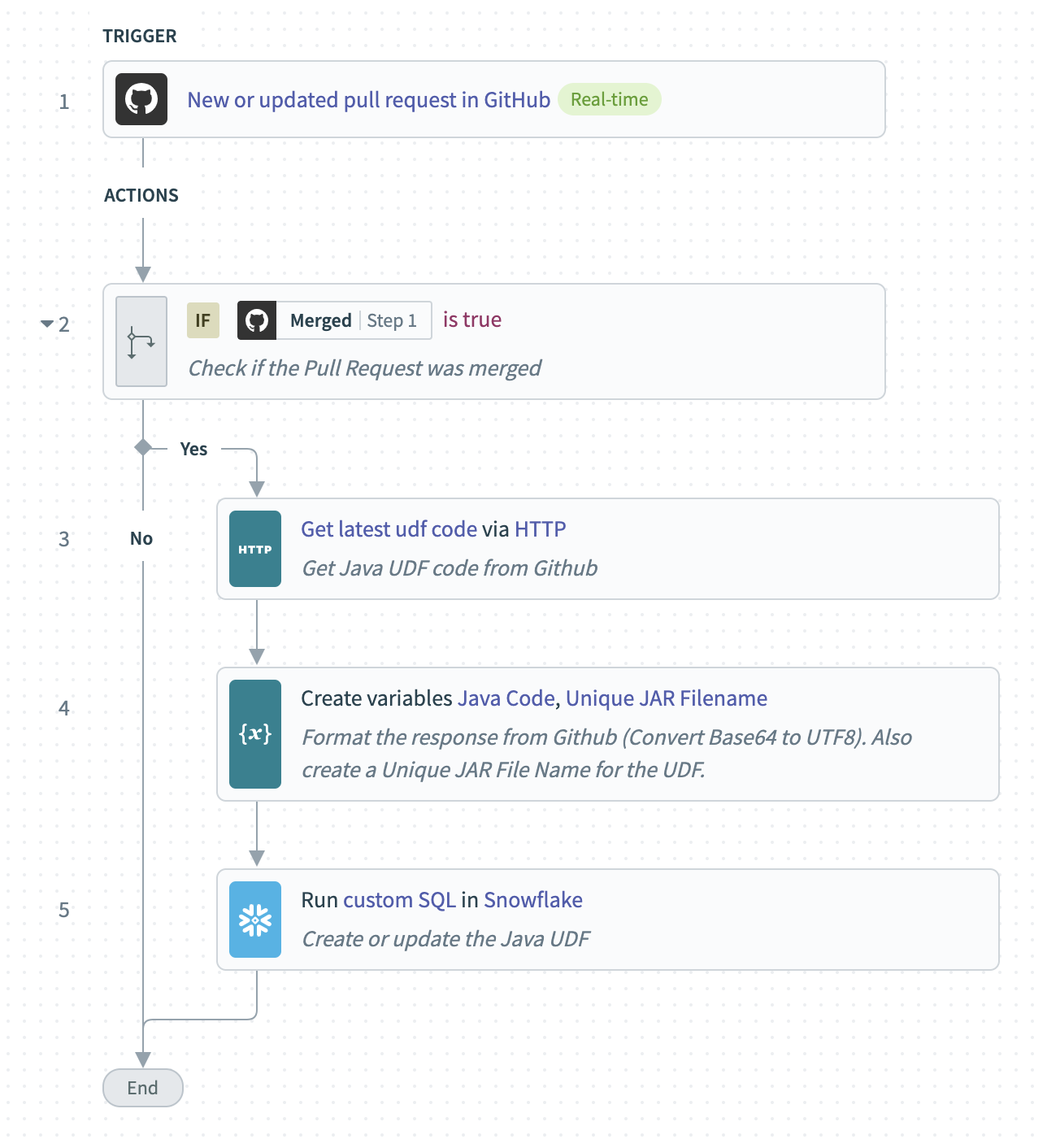

Workato is able to solve this issue and accelerate your Java UDF release process. In the following recipe, we automate the following steps:

- When a Github Pull Request is merged (quality gates passed)

- Get the latest Java UDF code from the Github repository

- Update the Snowflake Java UDF

Note that we are creating Inline Java UDFs for this example.

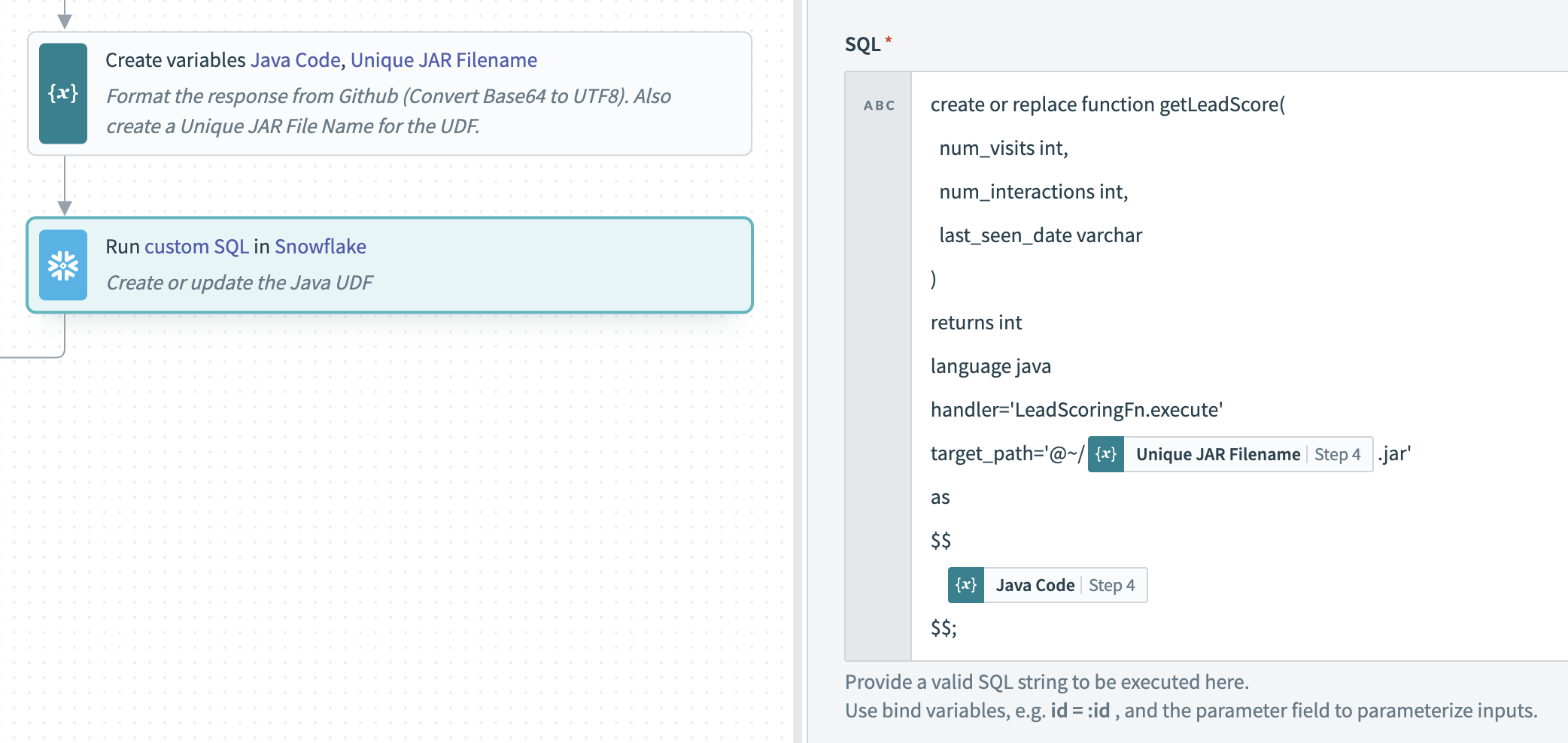

Here’s a closer look at the Java UDF creation step. It defines a getLeadScore function that takes in 3 parameters and returns a Sales Lead’s score (an integer).

The Java Code obtained from Github is injected into the SQL statement. Also, a unique JAR Filename is generated to prevent conflicts in case this UDF was previously created.

All done! We’ve demonstrated how Workato can help to automate the development lifecycle of Snowflake UDFs. While this was a simplistic example for brevity, Workato’s easy-to-use Recipe Editor and thousands of connectors enable it to support any development workflow that you may require.

What’s ahead – Support for Snowpark via Stored Procedures

Snowpark enables Snowflake users to use Scala or Java, in addition to SQL, in building out their data pipelines. Snowpark essentially provides a developer experience for users wanting to define workflows in Scala with all of the associated benefits – type checking, error reporting, etc. Snowpark also automatically converts all of your Scala code into SQL behind the scenes so these operations can run directly inside of Snowflake.

Like Java UDFs, Snowpark can also be utilized from Workato Recipes to create robust fully automated ELT flows with complex data transformations. This capability relies on Java Stored Procedures, which was showcased in the recent Summit 2021 Conference and slated to be released in the near future. We’ll be writing about this in our product blog as it becomes available, so stay tuned!