Product Scoop – April 2024

Process data more effectively with upgrades to FileStorage, SQL Transformations, and database connectors

Workato lets you build data pipelines that extract data from the file servers, applications, and databases across your organization, transform it, and load it into databases or data warehouses, where it can yield insights that help you better understand your business and customers.

April brought a wealth of data integration enhancements and additions, all built with efficiency, security, and scalability in mind. Users who have enabled the FileStorage and SQL Transformations connectors automatically get the new triggers and action updates.

Handle CSV data effectively with FileStorage

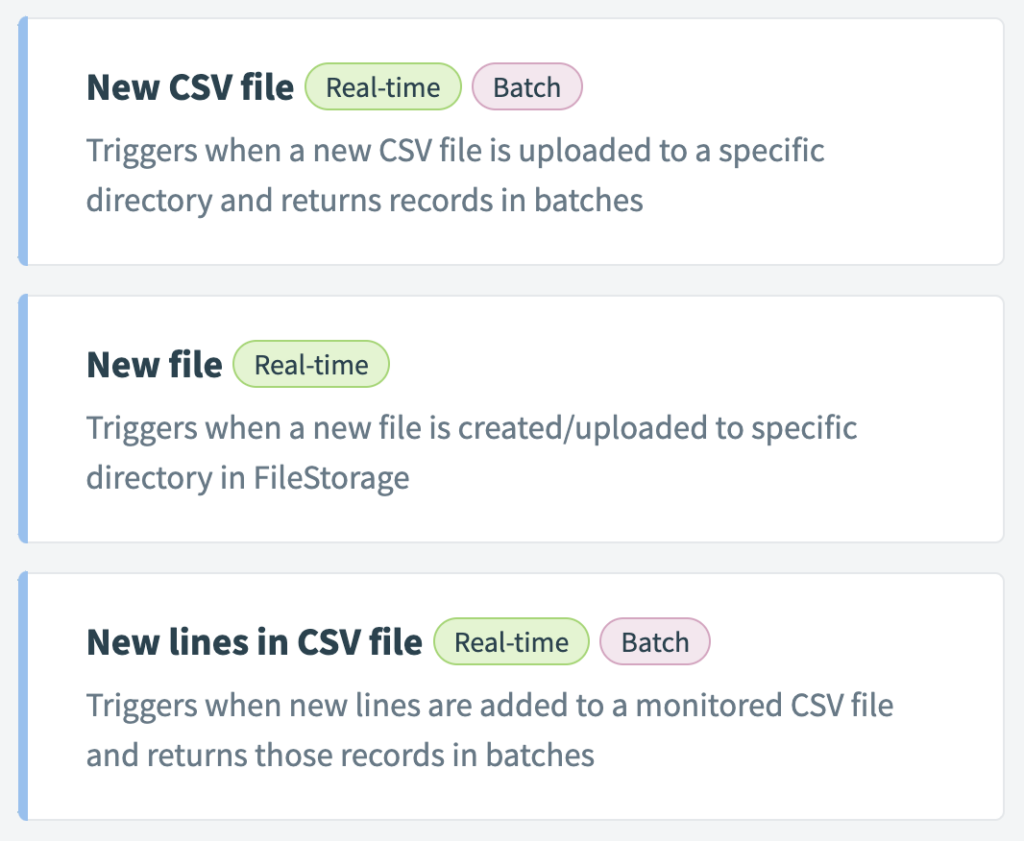

Workato FileStorage allows you to store and manipulate valuable automation data directly within Workato. With the new FileStorage actions and triggers, you can extract, store, append, and fetch data from CSV files, and rest assured that the data stored in Workato conforms to the column schema that downstream applications and operations expect.

These additional operations are key to tackling challenges such as:

- Data volume mismatches between source and destination: Store the source data in FileStorage with a CSV schema, then use the New CSV file trigger or the Get CSV lines in batches action to fetch the data in batches and send it to the destination application.

- Schema safety in data pipeline setup: Fetch data from the source application as a CSV file and store it in FileStorage with its schema. In the load recipe, fetch the data from FileStorage and send it to the warehouse in bulk! Because any schema mismatches are caught when the data is stored, you know the data sent to the warehouse matches the structure you expect.

- Real-time directory monitoring: Instead of polling to check whether a new file has been added to a directory, use the New file trigger to monitor directories and subdirectories and take action immediately.
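The schema check and batching described above can be illustrated outside Workato. The following is a minimal Python sketch that uses only the standard `csv` module; it is not how FileStorage is implemented, and the column list `EXPECTED_COLUMNS` and the function `read_in_batches` are hypothetical. It rejects data whose header or rows don't match the expected columns, and otherwise yields rows in fixed-size batches for a destination that accepts a limited number of records per call.

```python
import csv
import io
from typing import Iterator

# Hypothetical column schema that the stored CSV must follow.
EXPECTED_COLUMNS = ["id", "email", "amount"]


def read_in_batches(csv_text: str, batch_size: int) -> Iterator[list[dict[str, str]]]:
    """Validate CSV rows against EXPECTED_COLUMNS and yield them in batches.

    Validation runs as iteration proceeds: a bad header fails before any batch
    is yielded, and a malformed row fails before its own batch is yielded.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    if reader.fieldnames != EXPECTED_COLUMNS:
        raise ValueError(f"Header mismatch: expected {EXPECTED_COLUMNS}, got {reader.fieldnames}")
    batch: list[dict[str, str]] = []
    for row_no, row in enumerate(reader, start=1):
        # DictReader stores extra fields under the key None and fills missing fields with None.
        if None in row or None in row.values():
            raise ValueError(f"Data row {row_no} does not match the {len(EXPECTED_COLUMNS)}-column schema")
        batch.append(row)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch  # final, possibly smaller, batch


sample = "id,email,amount\n1,a@example.com,10\n2,b@example.com,20\n3,c@example.com,30\n"
for batch in read_in_batches(sample, batch_size=2):
    print([row["id"] for row in batch])
# ['1', '2']
# ['3']
```

Catching the mismatch at read time, before anything is sent downstream, is the same idea as validating when data is stored: the destination only ever receives rows with the expected shape.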

Query data directly from Excel files and Workato data tables in SQL Transformations

With these additional data sources and formats, the SQL Transformations action is now even more adaptable to how you work.

Here are a few challenges you can tackle with these new formats:

- Data standardization and formatting across channels: Store the Excel files from your various channels in FileStorage, then use the Query data action in the SQL Transformations connector to select the worksheets and data ranges to work with, and run a query to standardize the data!

- Aggregated reporting across tables in your Workflow Apps: Use the Query data action in the SQL Transformations connector and specify each data table you want to join as a data source; then join and aggregate as you wish!

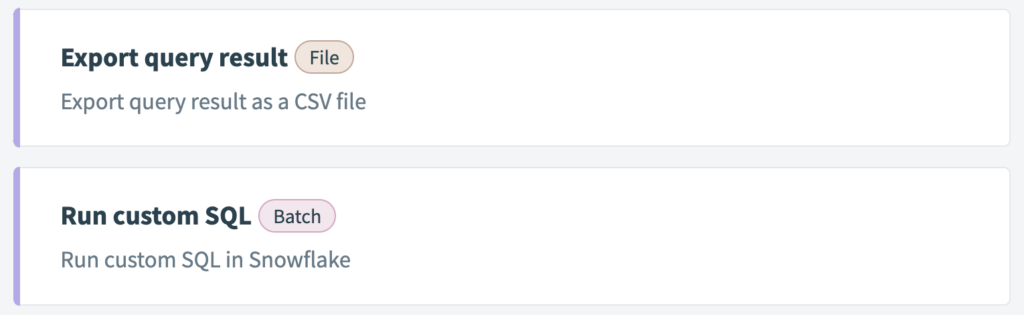

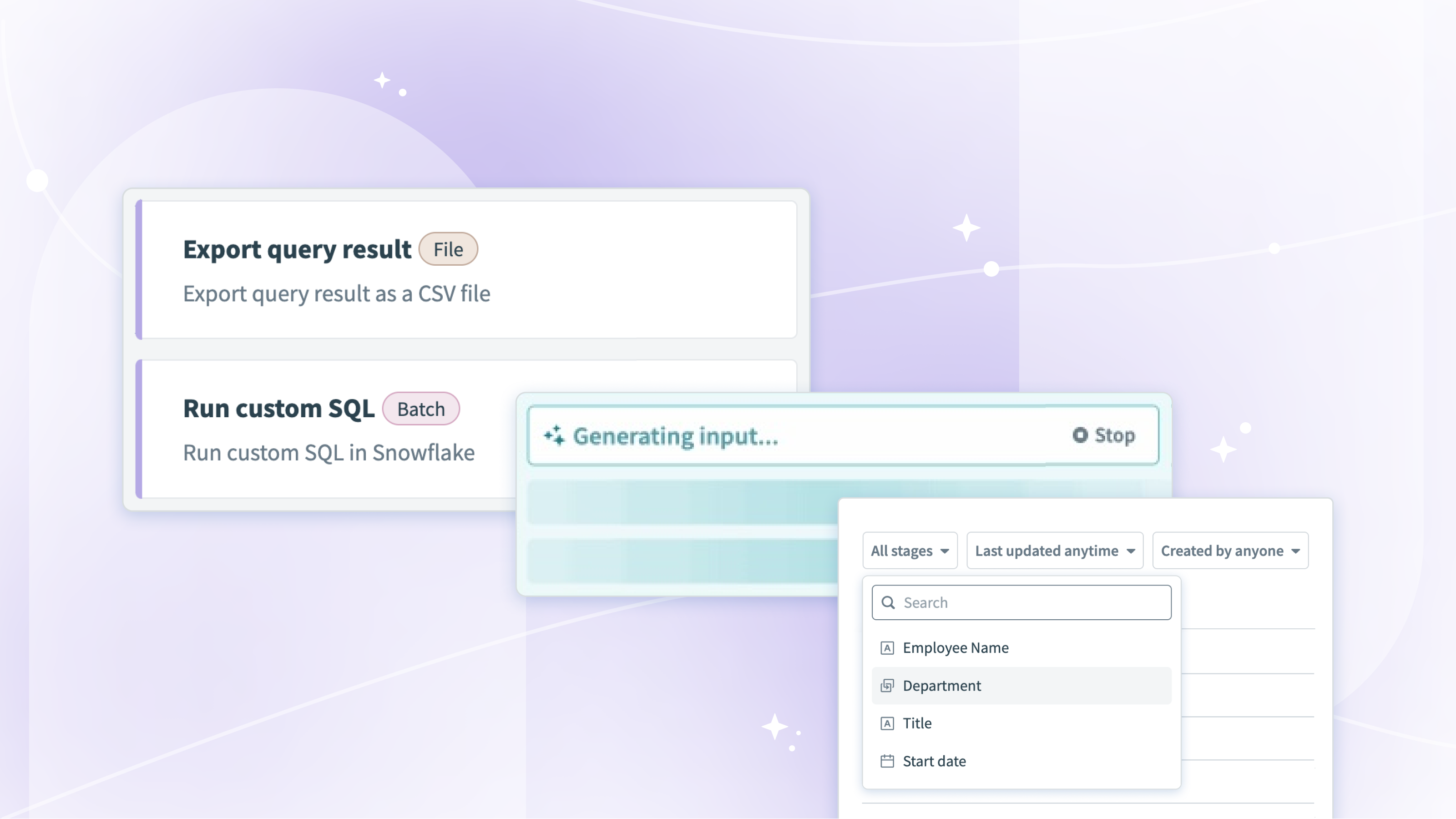
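To make the join-and-aggregate idea concrete, here is a small sketch that uses Python's built-in `sqlite3` module as a stand-in. The table names, columns, and values are hypothetical, and SQLite's SQL dialect may differ from the one the Query data action supports.

```python
import sqlite3

# Hypothetical stand-ins for two Workflow Apps data tables, held in memory.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE accounts (account_id INTEGER PRIMARY KEY, region TEXT);
    CREATE TABLE requests (request_id INTEGER PRIMARY KEY, account_id INTEGER, amount REAL);
    INSERT INTO accounts VALUES (1, 'EMEA'), (2, 'AMER'), (3, 'EMEA');
    INSERT INTO requests VALUES (10, 1, 100.0), (11, 1, 50.0), (12, 2, 75.0), (13, 3, 25.0);
    """
)

# Join each request to its account, then aggregate request counts and totals per region.
REPORT_SQL = """
    SELECT a.region,
           COUNT(r.request_id) AS request_count,
           SUM(r.amount)       AS total_amount
    FROM requests AS r
    JOIN accounts AS a ON a.account_id = r.account_id
    GROUP BY a.region
    ORDER BY a.region
"""

report = conn.execute(REPORT_SQL).fetchall()
print(report)  # [('AMER', 1, 75.0), ('EMEA', 3, 175.0)]
```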

Handle long-running SQL queries and exports

Have you ever run a query or export that timed out? This update is for you! With long actions for databases, queries can now run for up to 120 minutes before timing out. Long actions for databases are currently available for Snowflake, PostgreSQL, MySQL, and Oracle.

You’ll see two new actions in the supported connectors: Run long query using custom SQL and Export query result. The Export query result action places no limit on the number of rows returned, eliminating the need for pagination logic.
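For context, this is the kind of pagination loop that an unlimited export makes unnecessary. It is a minimal sketch using Python's `sqlite3` module and `LIMIT`/`OFFSET` paging; the function `fetch_all_paginated` and the `invoices` table are hypothetical, and the query needs an `ORDER BY` so that pages are stable.

```python
import sqlite3
from typing import Iterator


def fetch_all_paginated(conn: sqlite3.Connection, query: str, page_size: int) -> Iterator[tuple]:
    """Yield every row of `query` by requesting one page at a time.

    `query` must be trusted SQL (it is concatenated, not parameterized) and
    should include ORDER BY so that pages neither overlap nor skip rows.
    """
    offset = 0
    while True:
        page = conn.execute(f"{query} LIMIT ? OFFSET ?", (page_size, offset)).fetchall()
        yield from page
        # A short (or empty) page means there are no more rows to fetch.
        if len(page) < page_size:
            break
        offset += page_size


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE invoices (id INTEGER PRIMARY KEY)")
conn.executemany("INSERT INTO invoices (id) VALUES (?)", [(i,) for i in range(1, 6)])
rows = list(fetch_all_paginated(conn, "SELECT id FROM invoices ORDER BY id", page_size=2))
print(rows)  # [(1,), (2,), (3,), (4,), (5,)]
```

Every extra round trip, and every chance of rows shifting between pages, is something a single unbounded export avoids.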

Support for loading with Snowflake’s internal stages

A Snowflake internal stage is a storage location within a Snowflake account that holds data files during operations such as loading data into a table or unloading data from a table.

With the new Upload to internal stage action and the updated Bulk load from stage action, you can upload files to an internal stage and load them from it, streamlining data transfer and ingestion for smoother, more efficient data loading workflows.

Break the formula mode ice with Recipe Copilot

Copilot keeps getting smarter! With this release, users in workspaces with Copilot enabled can ask Copilot to help generate a formula while building a recipe. Copilot evaluates which combination of datapills and formulas best satisfies your request, then displays it for you to review.

Best practices

- Be specific! Give a clear example or context for what you are looking for, such as “I want the value of the amount due from the invoice as an integer.”

- Be human! Review what Copilot suggests and adjust it as needed. Your recipe could have thousands of datapills, so Copilot may occasionally pick the wrong one while it learns.

Wait for response with asynchronous HTTP

With the HTTP connector, our goal is always to provide the most flexible and universal connectivity. With the new long action, a request can wait up to 1 hour for a response, and your job picks back up once the response is received. If you work with legacy APIs or are shifting to production-size loads, try toggling Wait for response in your HTTP action to allow a longer response time.

Wait for resume action with Connector SDK

Universal connectivity updates abound! The Wait for resume action in the Connector SDK opens doors and simplifies the recipe build experience. You can define actions that suspend a job until an event takes place in another system, so a single recipe can handle a workflow from start to finish.

For example, a recipe can submit an invoice and wait for approval or rejection before continuing, or send an email to a prospect and wait for it to be opened before moving to the next action.

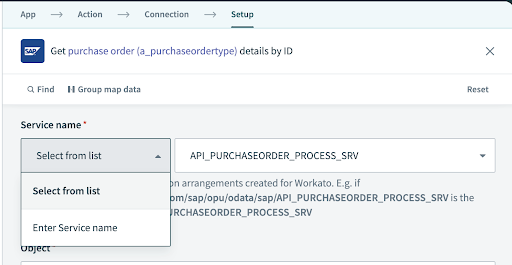
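The suspend-and-resume pattern can be sketched generically with Python's `asyncio`. This is not the Connector SDK's API (the names `invoice_job` and `main` are hypothetical). It only shows a job that pauses until another system supplies a decision, with a timeout as a safety net.

```python
import asyncio


async def invoice_job(resume: asyncio.Future, timeout: float = 5.0) -> str:
    """Hypothetical job: submit an invoice, suspend, then continue on the decision."""
    print("Invoice submitted; job suspended")  # stand-in for the real submit step
    # Suspend here until another system resolves the future (or the timeout expires).
    decision = await asyncio.wait_for(resume, timeout=timeout)
    # Resume the workflow based on the external decision.
    return "pay invoice" if decision == "approved" else "notify submitter"


async def main(decision: str = "approved") -> str:
    loop = asyncio.get_running_loop()
    resume: asyncio.Future = loop.create_future()
    job = asyncio.create_task(invoice_job(resume))
    # Simulate the approver's response arriving later, e.g. via a webhook callback.
    loop.call_later(0.1, resume.set_result, decision)
    return await job


print(asyncio.run(main()))  # pay invoice
```

The key point is that the job holds no polling loop: it simply awaits a signal, which is what lets one recipe span the whole approval cycle.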

Catalog service support in SAP OData connector

We continue to invest in our SAP connectivity, including our SAP OData connector. Previously, you needed to know the internal technical name of the OData service you wanted to use and enter it manually in the action configuration. Gone are those days with Workato!

You can now conveniently choose available OData services from a dropdown menu, eliminating the need to dig through SAP documentation for the services and permissions required.

Audit log streaming error notifications and retry

Ensuring audit logs are present and accurate is critical for governance. Log streaming can be disrupted by a variety of issues, such as misconfigured connections, temporary network errors, and streaming destinations that Workato can no longer reach. What does Workato do to ensure you have the audit logs you expect?

Error Notification

Email notifications are sent when intervention is needed to fix the issue, such as:

- Voluntary disconnections – if the streaming connection is disconnected by a user

- Involuntary disconnections – if Workato detects that it no longer has access to send logs to the destination application

- Destination-specific errors – each supported streaming destination has specific errors that trigger a notification

Retry Mechanism

If log streaming fails, Workato attempts to resend the logs over a period of 7 days, making roughly 7 to 8 attempts during that period. If the log still cannot be delivered after seven days, Workato considers it a failed event. In most cases, errors are resolved quickly and Workato delivers the log in fewer attempts.

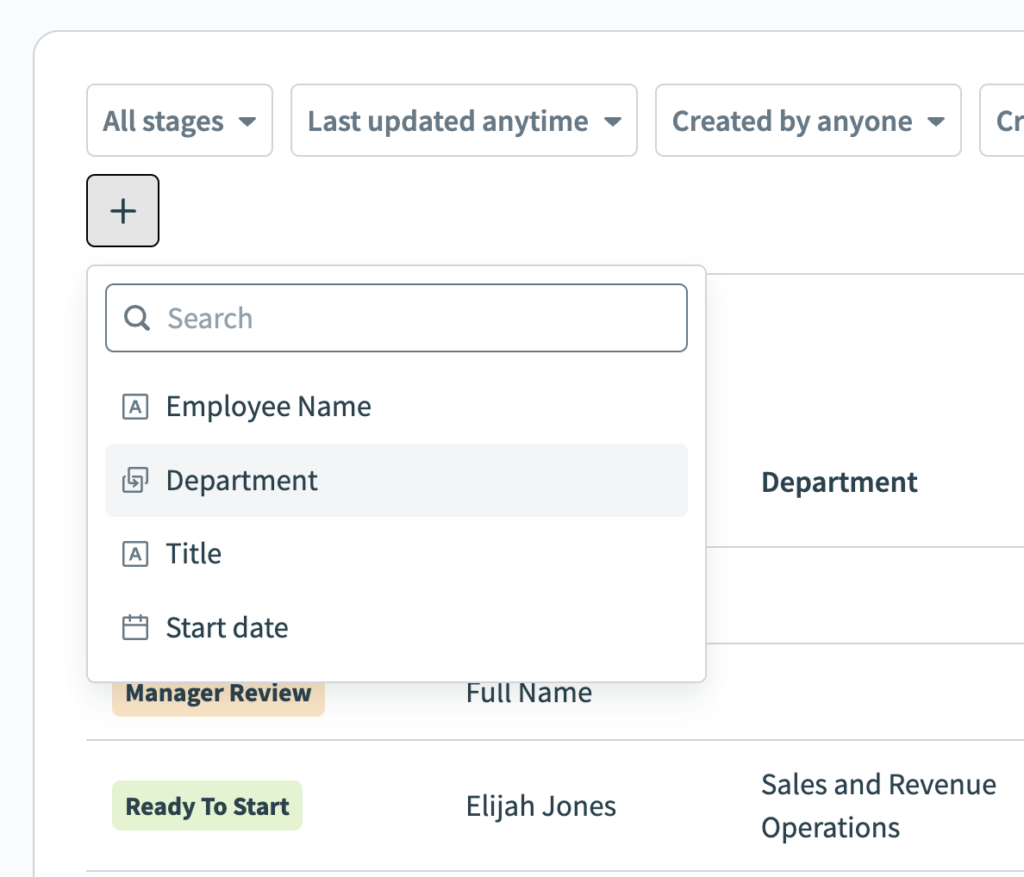
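The exact retry intervals aren't given above, so the following is a hypothetical exponential backoff schedule, sketched in Python, that happens to produce a similar number of attempts. It assumes a 1-hour initial delay that doubles after each failure, and stops once the next retry would fall outside the 7-day window. These parameters are illustrative only, not Workato's actual settings.

```python
from datetime import timedelta

# Hypothetical parameters: these are NOT Workato's actual retry settings.
RETRY_WINDOW = timedelta(days=7)
INITIAL_DELAY = timedelta(hours=1)
BACKOFF_FACTOR = 2


def backoff_schedule(initial_delay: timedelta, factor: int, window: timedelta) -> list[timedelta]:
    """Return retry times, measured from the first failure, that fit inside the window."""
    offsets: list[timedelta] = []
    delay = initial_delay
    elapsed = timedelta(0)
    # Keep scheduling retries until the next one would land past the window.
    while elapsed + delay <= window:
        elapsed += delay
        offsets.append(elapsed)
        delay *= factor  # exponential backoff: each wait is twice the previous one
    return offsets


schedule = backoff_schedule(INITIAL_DELAY, BACKOFF_FACTOR, RETRY_WINDOW)
hours = [int(offset.total_seconds() // 3600) for offset in schedule]
print(hours)  # [1, 3, 7, 15, 31, 63, 127]
```

Backing off this way front-loads retries, so short outages clear within hours, while still spacing attempts out across the full window for longer ones. A production scheduler would typically also add random jitter and stop as soon as a retry succeeds.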

See the workflow requests that matter most to you with filter views

While in a Workflow App, you can now filter the list of requests by any column that’s visible to your role for that app. For example, customer success teams in a customer onboarding app could filter down to new accounts receiving the Platinum onboarding package to prioritize their time.

Additionally, any filters applied are saved in the URL parameters, so you can bookmark your favorite views to jump back to them quickly!
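Saving filters in URL parameters is what makes a view bookmarkable. As a rough sketch using Python's standard `urllib.parse`, filters can be written into and read back from a URL's query string. The base URL and the parameter names `status` and `package` are hypothetical, not the actual Workflow Apps URL format.

```python
from urllib.parse import parse_qs, urlencode, urlsplit, urlunsplit


def with_filters(url: str, filters: dict[str, str]) -> str:
    """Return `url` with each filter stored as a query-string parameter."""
    scheme, netloc, path, query, fragment = urlsplit(url)
    params = parse_qs(query)  # keep any parameters already present
    for name, value in filters.items():
        params[name] = [value]
    return urlunsplit((scheme, netloc, path, urlencode(params, doseq=True), fragment))


def read_filters(url: str) -> dict[str, str]:
    """Recover the filters from a bookmarked URL (first value per parameter)."""
    return {name: values[0] for name, values in parse_qs(urlsplit(url).query).items()}


# Hypothetical base URL and parameter names, for illustration only.
bookmark = with_filters("https://apps.example.com/requests", {"status": "new", "package": "Platinum"})
print(bookmark)  # https://apps.example.com/requests?status=new&package=Platinum
print(read_filters(bookmark))  # {'status': 'new', 'package': 'Platinum'}
```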

A new visual experience on docs

Our docs got a visual and organizational upgrade! Check out the new home page, and jump into your favorite area of the platform.